
Learnings from 800+ GenAI and ML use cases


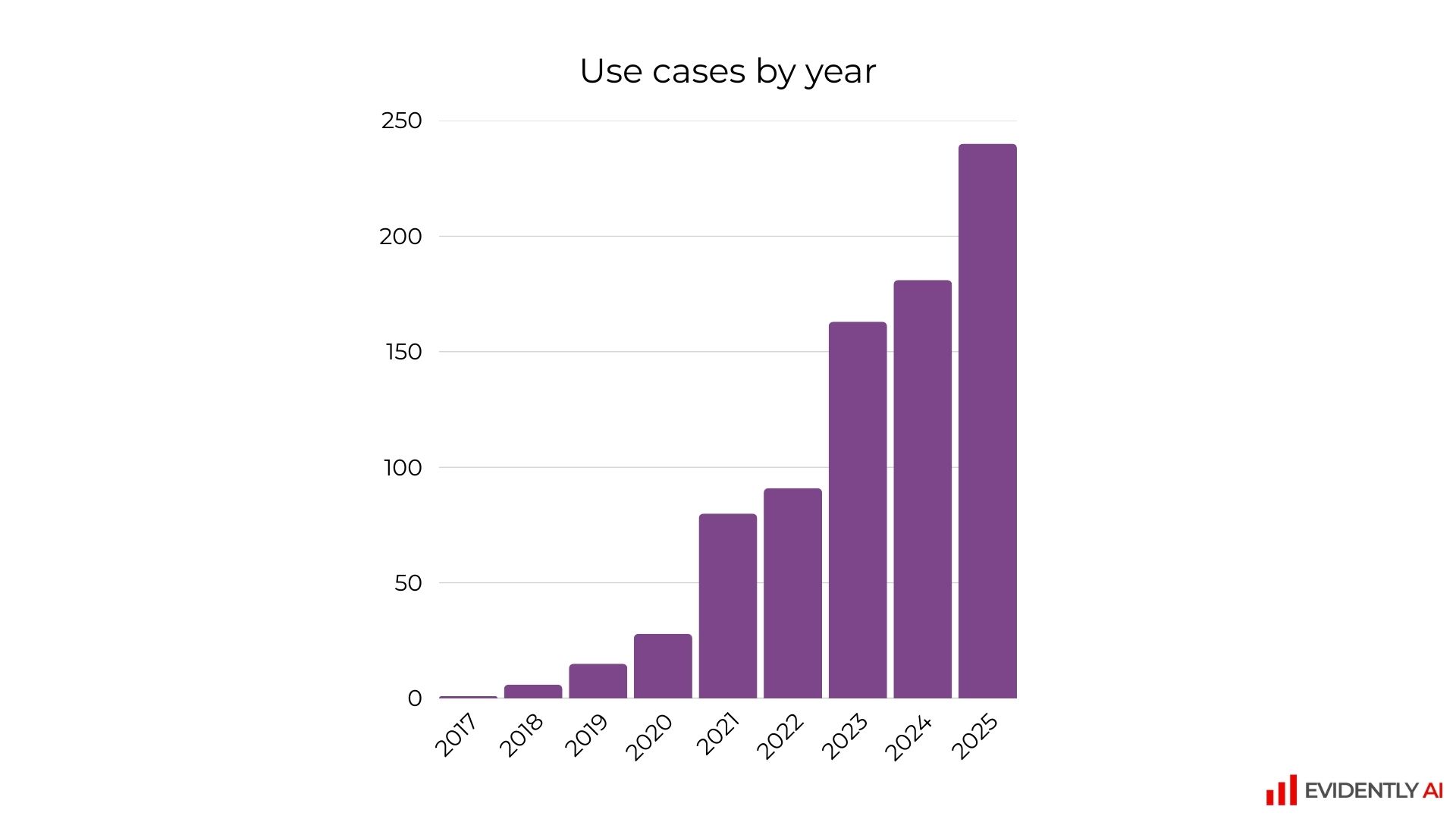

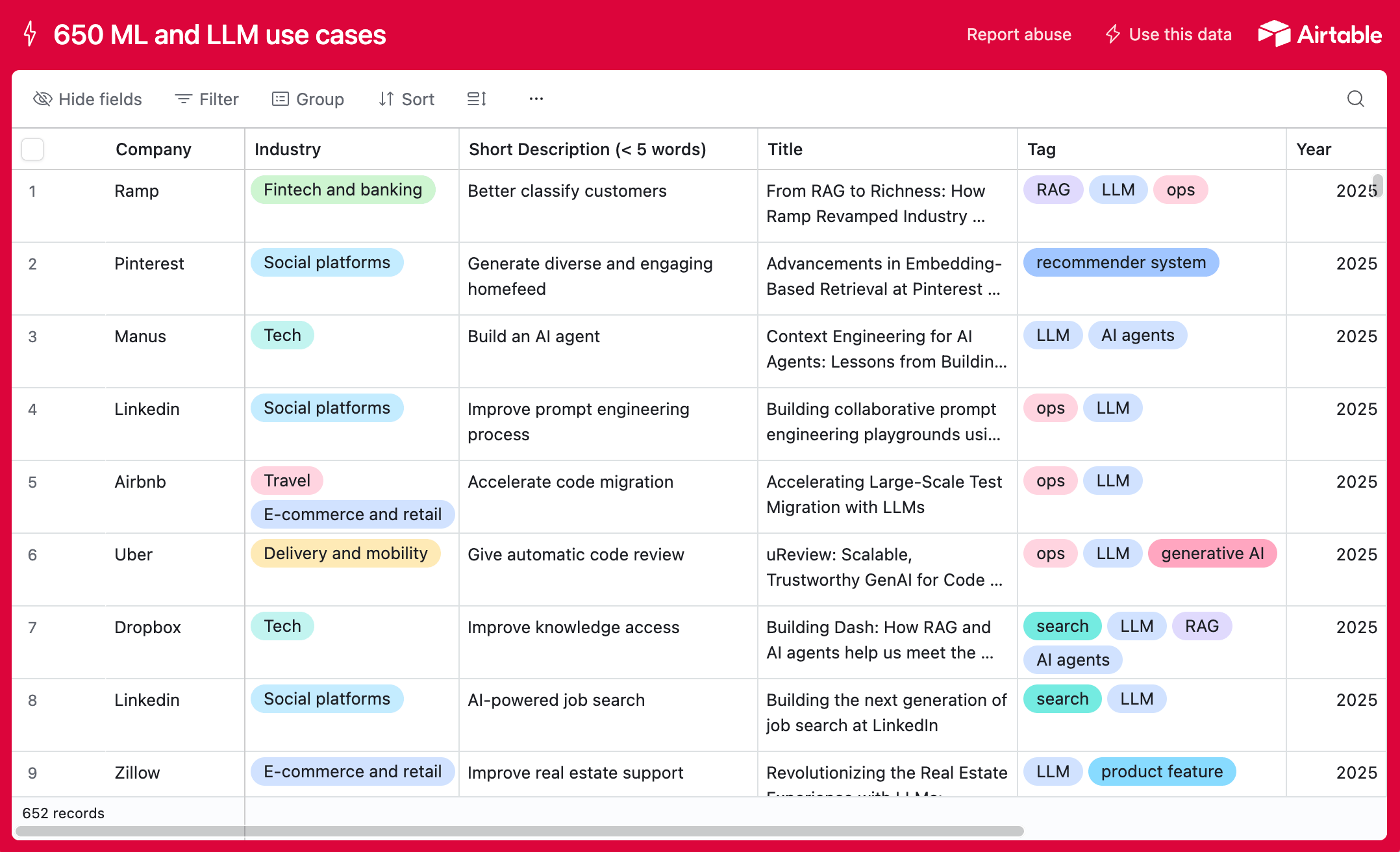

Since 2023, we’ve been continuously curating and updating a database of real-world AI and ML use cases. Today, it includes 800+ production GenAI and ML applications from 185 companies, spanning 2017 to 2025.

These are not demos or experiments – they are AI systems running in production, powering products, operations, and decision-making workflows.

With this breadth of examples, we stepped back to ask a broader question: How are companies actually using AI in production, and how has that evolved over time?

Below, we highlight some of the key patterns we found.

💻 You can check the full database here.

Database composition

The database contains 805 GenAI and ML use cases, sourced from blog posts, papers, and articles that describe in-house AI systems. While the coverage spans 2017-2025, the majority of examples come from 2023-2025, reflecting the recent acceleration in production AI adoption.

We deliberately focused on systems built internally by companies – not solutions sold or implemented by vendors. The dataset excludes vendor-published "case studies" and promotional announcements that merely mention a new AI feature. Instead, we focused on technology blogs, conference talks, and occasional papers that describe implementation details.

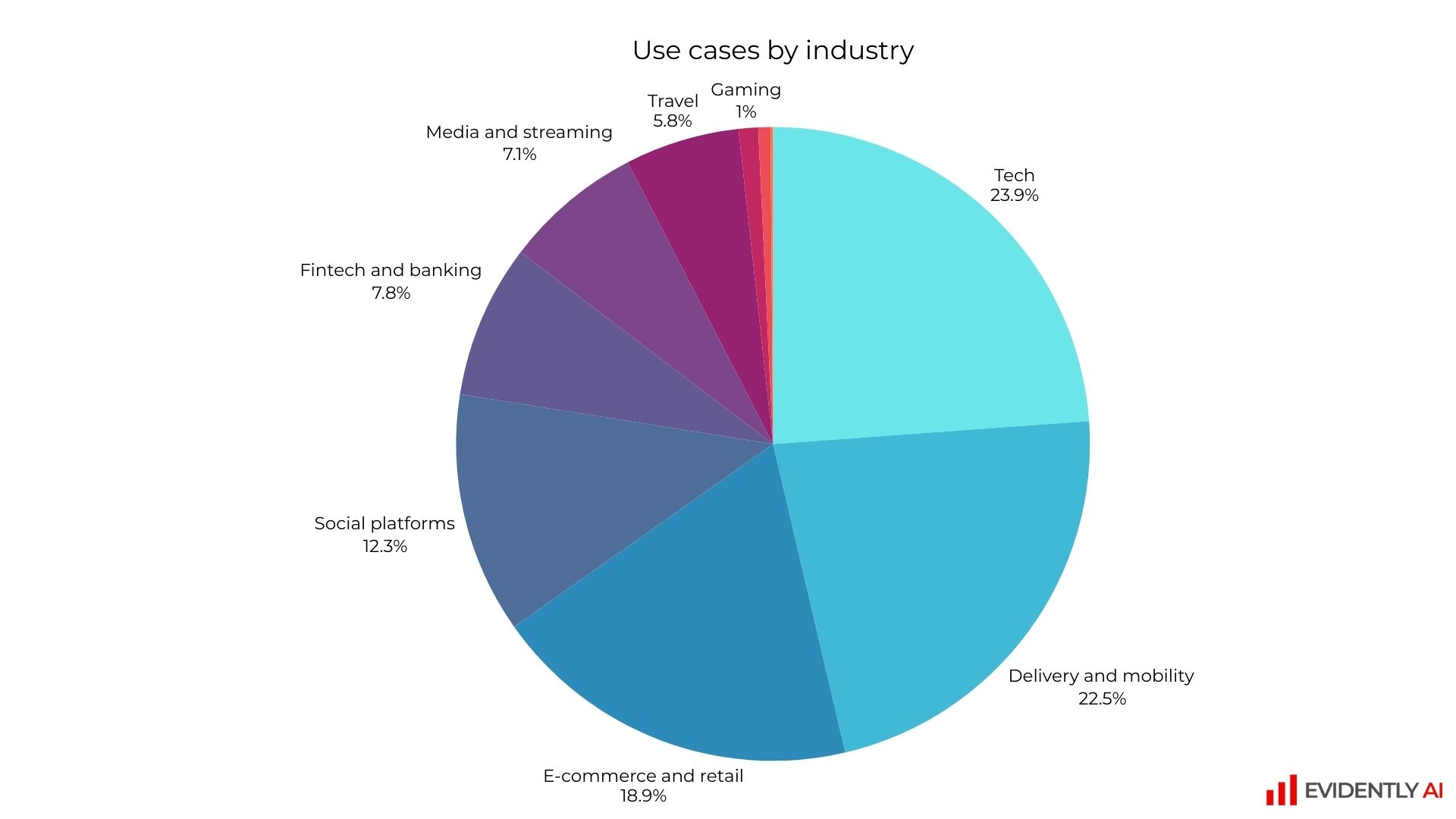

In total, the dataset covers use cases from 185 companies across a wide range of industries:

Use case taxonomy

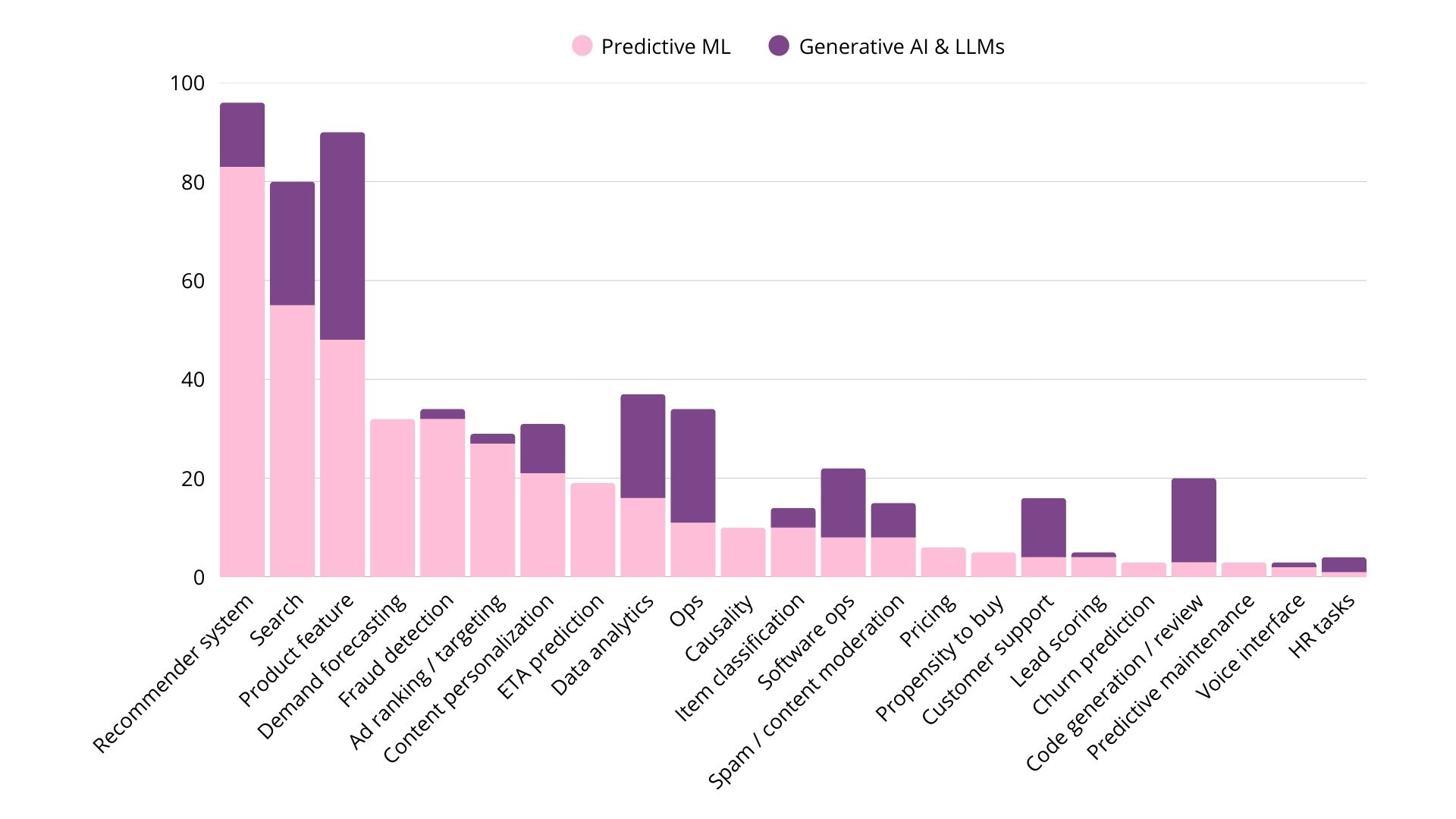

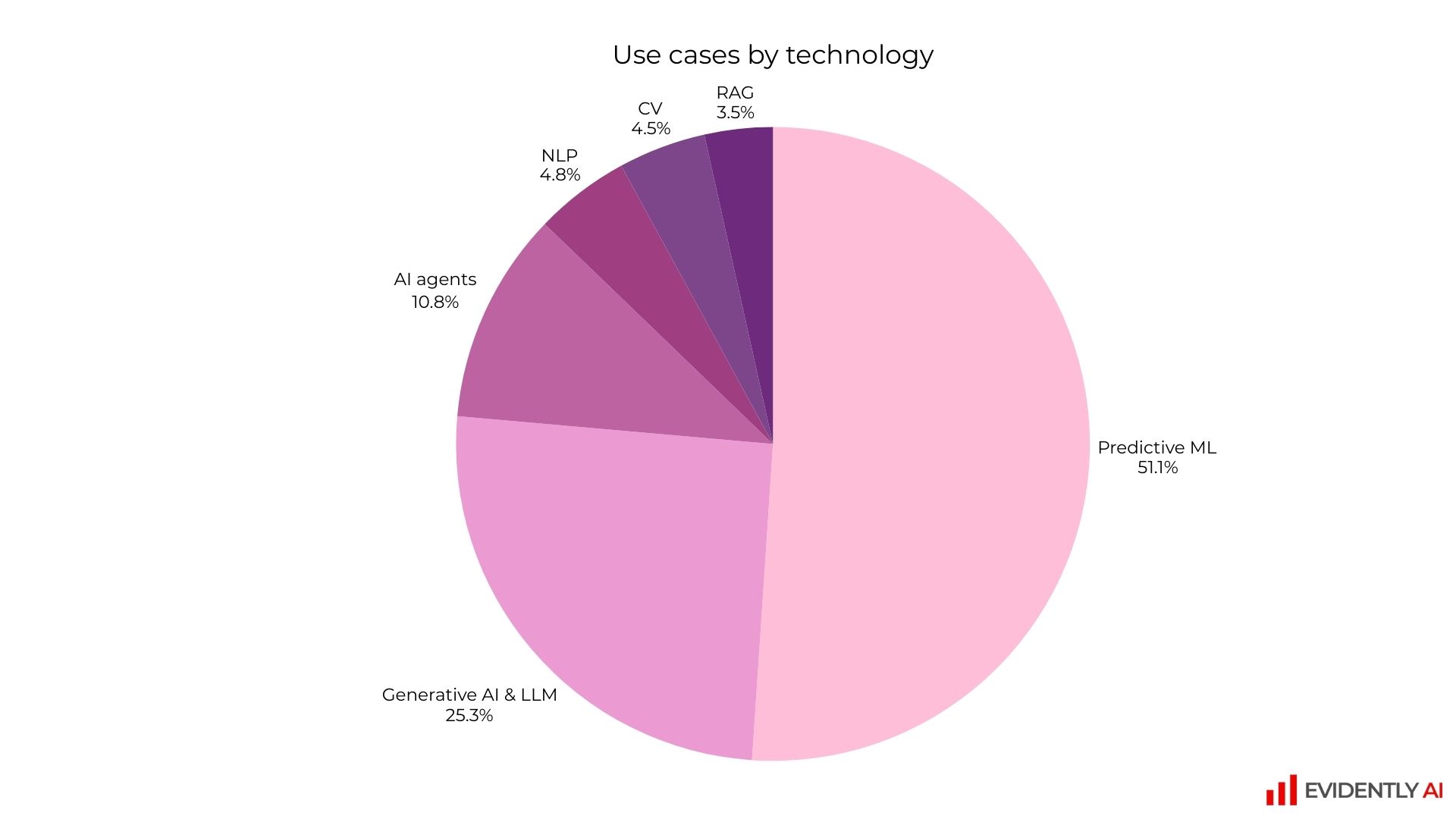

We grouped use cases by underlying technology. Predictive ML (together with "classic" computer vision and NLP applications) still accounts for about 60% of all examples. GenAI systems account for the remaining 40% of production use cases, even though GenAI has been a mainstream production technology for only a few years.

For convenience, we singled out AI agents and Retrieval-Augmented Generation (RAG) systems as separate category tags, reflecting their growing visibility in real-world applications.

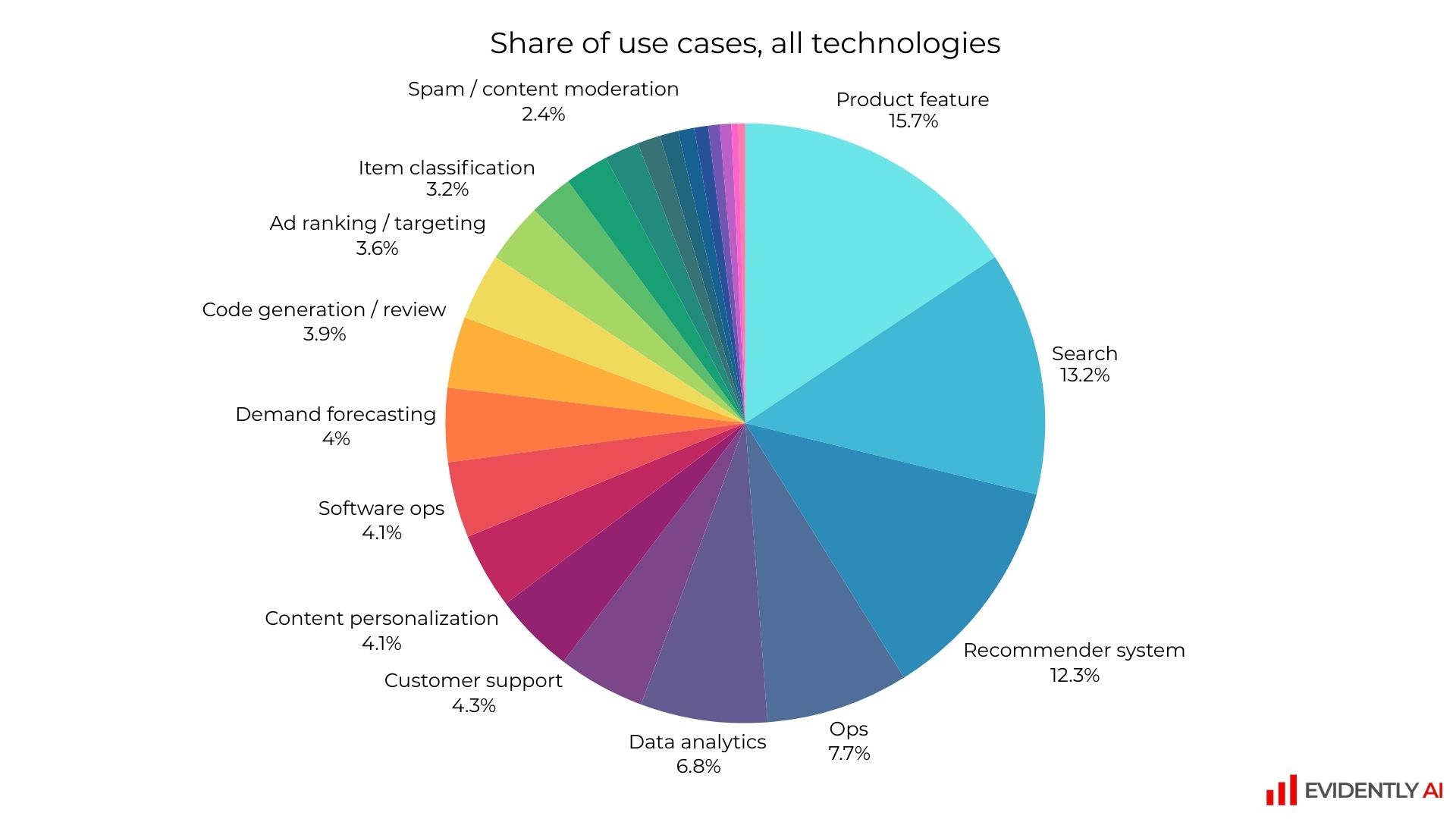

In parallel, we grouped use cases by recurring application types, such as demand forecasting, fraud detection, content personalization, and others.

Note: Since the previous update, we have refined the taxonomy to make application types more specific. For example, data analytics, code generation, and software operations were split out from the broader “ops” category.

While no taxonomy is perfect, this structure reveals several clear and consistent patterns. Let’s walk through them.

Most popular use cases

User-facing AI leads the way.

Applications where AI powers a specific user-facing feature are labeled as “product feature.” This category spans everything from grammar correction and outfit generation to coding assistance. In all these cases, AI is built directly into the core product the company develops, as opposed to supporting some internal business process.

Creating customer “aha moments” has always been a priority – but the democratization of GenAI and LLMs has made it significantly easier to design and deploy compelling AI-powered features directly into products.

Examples:

- Grammarly’s on-device writing assistant.

- DoorDash automatically generates menu item descriptions for restaurants.

- The New York Times' handwriting recognition for its crossword puzzles.

A lot of AI value is created behind the scenes.

Still, companies continue to invest heavily in optimizing high-volume internal workflows. Here, AI often powers use cases such as data analytics and software testing (grouped under “ops” in our taxonomy). While the applications vary depending on the specifics of the business, the objective remains consistent: to reduce costs and effort associated with repetitive processes.

For example:

- Agoda uses GenAI to resolve security incidents faster.

- GoDaddy analyzes customer support transcripts.

- Plaid automates data labeling.

Search and recommender systems remain core drivers.

Search and RecSys together account for roughly a quarter of all analyzed use cases. E-commerce and consumer platforms were early adopters, using AI to surface the right products or content at the right time and help with product discovery. Improved search, better targeting, and increasingly sophisticated personalization remain central themes even in the GenAI world:

- Delivery Hero builds multilingual search with few-shot LLM translations.

- Target uses LLMs to improve recommendations for related accessories.

- Wayfair uses vision LLMs to identify stylistic compatibility between recommended products.

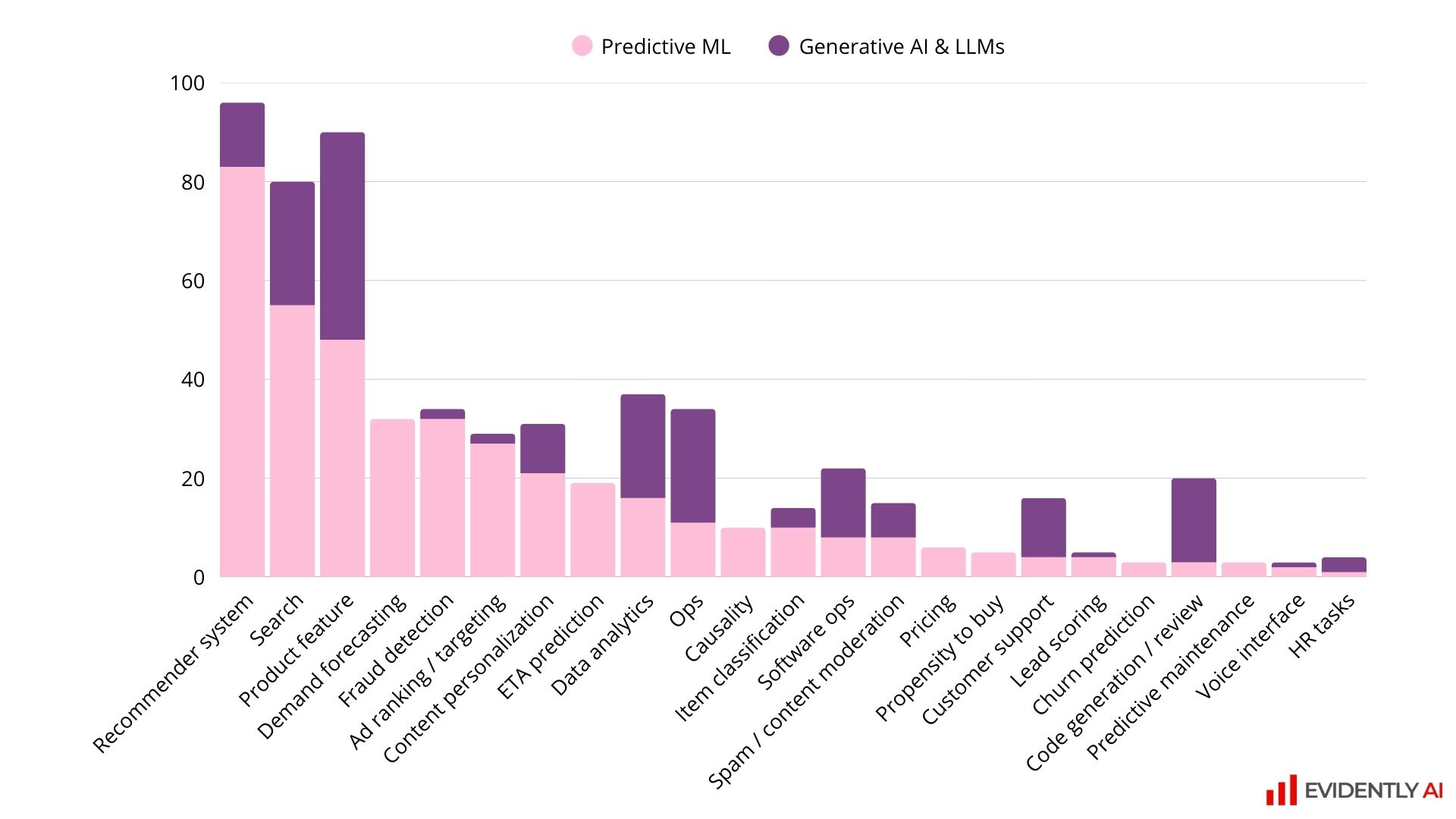

Predictive ML vs GenAI use cases

One striking observation is how consistent the application types remain even as the underlying technology shifts from predictive ML to GenAI. The use cases we just discussed (search, recommendations, ops, and customer-facing product features) continue to dominate, now enhanced by generative capabilities and the stronger semantic understanding of LLMs.

How use cases evolve over time

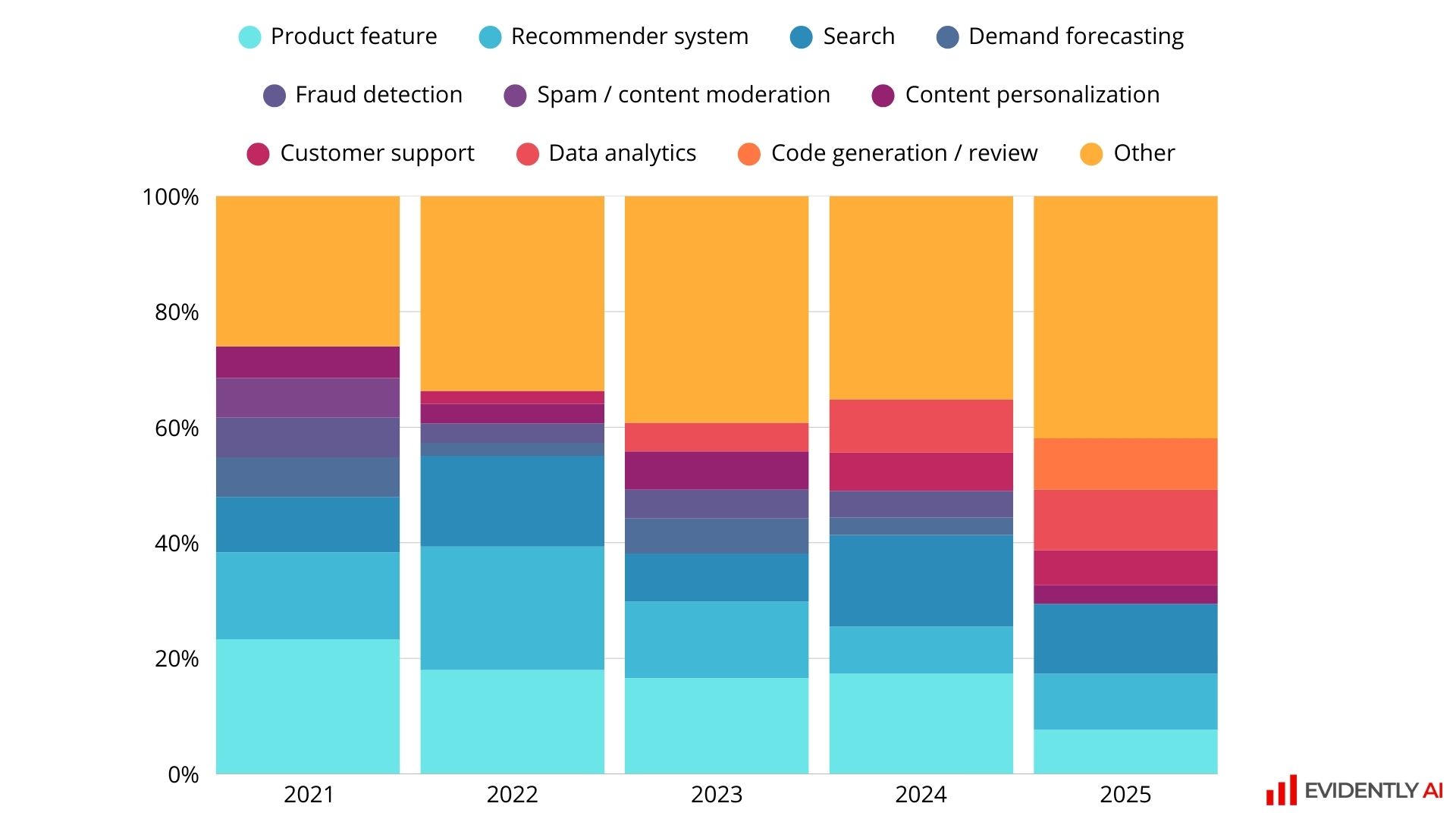

We also tracked how use case popularity has changed over time. Below are the top seven use cases across 2021–2025.

Search and recommendations remain evergreen.

Across every year and every technology wave, search and recommender systems remain among the top three AI applications.

Code generation and data analytics are the new defaults.

With the rise of LLMs, data analytics – such as Text-to-SQL or automated analytical reporting – quickly became a common first use case. By 2025, code generation had emerged as another primary application. Customer support, powered mainly by RAG-based chatbots, has also grown significantly.

By contrast, more “classical” predictive ML applications like demand forecasting, fraud detection, or spam moderation still exist – but companies write about them far less frequently today.

Examples of code generation and analytics use cases include:

- Delivery Hero helps extract and visualize data with no code.

- Grab generates analytical reports using LLMs.

- Intuit launches coding assistant for developer productivity.

- ASOS adopts AI-powered vibe coding.

AI agents and RAG

We analyzed AI agents and RAG systems as distinct technologies under the GenAI umbrella. Together, they account for roughly 15% of all documented use cases.

The most common agentic applications automate workflows such as customer support, analytics, and coding, as well as complex search. For example:

- Uber uses an enhanced Agentic RAG (EAg-RAG) to improve the quality of answers provided by its on-call copilot, Genie.

- Cubic created an AI code review agent.

- Booking’s AI agent suggests relevant responses to customer inquiries.

- Ramp built an agentic data analyst.

For RAG systems, customer support is the dominant use case. Examples:

- DoorDash built an LLM-based support chatbot.

- Thomson Reuters helps customer support executives quickly access the most relevant information.

- Coinbase builds a conversational support chatbot.

Many companies also build general-purpose AI assistant chatbots that help employees access internal knowledge; see examples from Amplitude and Grab.

💻 More agentic AI and RAG examples.

Summing up

Across 800+ production use cases, a few clear patterns emerge:

- AI is firmly embedded in both user-facing features and backend operations.

- GenAI is rapidly scaling alongside predictive ML, often powering the same applications with new capabilities layered in.

- Search and recommender systems remain the most “evergreen” AI application.

- RAG and AI agents are gaining traction in support, analytics, and complex workflows.
